Senior Developer at Peninsula Data Solutions.

Bulk Data Loading for the Sullom Voe Environmental Database

Importing data in bulk can be difficult to implement and can result in a negative experience for users if it is done poorly. By creating a standardised import module framework that considered the users' interactions at every stage, PDS were able to deliver an effective solution that empowered users to own their own data.

Background

Peninsula Data Solutions Ltd (PDS) were commissioned by Ocean Ecology Ltd (OE) to extend an existing web application (ABACUS) previously developed by PDS for OE, by adding a comprehensive, validated data import module. The data import module would be used to store a digitised dataset of marine chemical, sediment and species from 31 separate years from 1977 to 2018 – the Sullom Voe Environmental Database (SVED) managed by the Shetland Oil Terminal Environmental Advisory Group (SOTEAG).

The ABACUS application was already purpose built as a standardised platform for marine ecological data that complied with national and international recognised data standards and so importing the SVED data into it would achieve both the requirement to digitise the existing dataset while cleansing it and improve data quality throughout.

However, ABACUS was initially designed as a tool for recording data as it was collected so it did not offer functionality to bulk create/add data. As the intended dataset contained over 11,000 sample events and over 125,000 observations of marine species a solution was needed to allow bulk import and validation of data. Data would need to be imported for Stations, Sample Events, Species, 3 kinds of Sediment Analysis, 14 kinds of Chemical Analyses.

While PDS are experienced using SQL Server Data Tools for data migration, cleansing and integration tasks, the client requirements in this case demanded a extension to the ABACUS application that was usable and intuitive for non-technical users.

Solution

Process

The requirement to perform validation on the data (valid data types, decimal places, date ranges etc.), enforcing referential and business constraints, as well as checking against reference lists informed the design to separate 'staging' areas for the data where it could be checked and cleansed, before becoming available in other 'live' areas of the application.

The process started with users selecting an import type and uploading an Excel file using the website. Detailed Excel templates were created containing instructions and columns. Initial checks on the Excel file were performed by the website before a creating extracting data out of the Excel file into a datatable. The datatable was loaded into staging tables in the database using stored procedures with user defined type parameters. Once loaded, a separate lookup stored procedure would retrieve IDs based on text values found in the source data. A validation stored procedure could be run on demand by users on the website to check for data quality issues and attempt to fix errors before trying again. Once validated had passed the import stored procedure could be run which would copy data from the staging area to the 'live' tables.

Loading data into staging tables before performing validation meant it was possible store data in the database as soon as possible, making the validation process easier, and providing other useful features such as viewing loaded data (even if there were errors) and inclusion in reports.

Keeping data in separate staging tables also meant there was no risk of non-validated data being accidentally used in areas of the website used for real data and real world tasks.

The structure of staging tables were matched as closely as possible the respective 'live' table but allowing text values wherever possible so that data type errors could be reported to users along with all other validation issues.

Extensible and Flexible

A design for the import module was chosen that would maximise the extensibility and flexibility by identifying tasks that would be common across all import types, and areas where behaviour would vary by type of import. To achieve this in practice PDS made use of (amongst other techniques): abstract classes and inheritance; and nested stored procedures.

public abstract class StgAreaDT

{

public DataTable SourceDataTable { get; set; }

public string SourceSheetName { get; set; }

public abstract void VerifyExcelFile(IFormFile excelFile);

public abstract void LoadParseExcelFile(byte[] fileBytes);

}

The simplified pseudocode above shows an example abstract class. Each import type would then inherit from the abstract class, and only need to define custom logic for the LoadParseExcelFile method (pulling data out of the required columns in the Excel file and loading into the SourceDataTable object which would be sent to the database and stored in the staging table).

Validated

Once data was loaded into a staging table, the task of validating the data was relatively simple. Validation procedures, written for each type of import, included relatively simple SQL statements would insert ‘validation failure records’ into a validation errors table. Tables were also created to contain Error Types giving a normalised design.

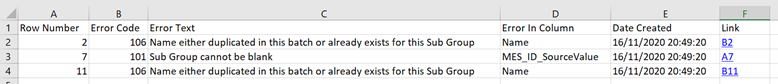

Once this data was available in the database it was also quite easy to return this data back to the user, and even possible to return this as an extra worksheet in the original import (Excel) file. With some clever Excel formulae, hyperlinks were even created in the error report in the Excel file to link directly to the problem cell.

Values in column F in the screenshot contained hyperlinks to the problem cells on the source data tab

Results

There were obvious benefits of digitising the 42+ year dataset that SOTEAG had collected but those benefits are out of the scope of this case study, instead the focus here is on the design of the import module and its benefits to extensibility and data quality.

Achieved balance of bespoke logic and standardised import framework.

The use of common reusable elements meant that additional import types could be added quickly and that maintenance of common elements is efficient. Once the framework was set up, minimal additional code (and only import type-specific code for that matter) needed to be added.

By identifying common tasks and type-specific tasks and accommodating both in the design PDS were also able to implement some very bespoke behaviour. For instance, one type of import could load data stored in a matrix format in the Excel file (with an changeable number of columns) rather than a typical table layout. This was achieved entirely in the LoadParseFileExcel() method discussed above. This bespoke logic was entirely contained in this method in an already existing type-specific class and therefore had no impact elsewhere in the process, once the data was loaded into the SourceDataTable, the following steps of the process were no different.

Validation approach

Design choices such as storing data in staging tables, reusable common stored procedures, but also enabling bulk import of lookup lists meant that PDS were able to implement both common and type-specific data quality checks. Storing data in a staging table and allowing the validation process to be run repeatedly meant users could attempt to fix problems while the data resided in the database and then iteratively fix problems and retry validation, rather than being forced to reload the entire file. Providing results in Excel files with direct links to cells containing problem data gave users a quick and intuitive way of viewing and fixing problems.

Summary

The bespoke import module was developed to import over 20 different types of data including some with unknown numbers of columns. The module enabled the import of hundreds of thousands of rows of data and millions of data points. For non-technical users it was easy to use, with a consistent process for all types of data, and provided a detailed report of any validation issues making it clear how to fix any problems. Once the solution was implemented, end users were able to manage the process of importing all their data with minimal support from technical staff and developers.

If your organisation needs to perform data imports on a regular basis or of a large historical dataset, PDS can offer:

- A real-world proven import module framework

- A solution that could bolt on to existing application, or exist as a standalone application

- A full data management support service for complicated data import tasks

- Data quality services, including data cleansing, migration and analytics

Contact us to find out more

Peninsula Data Solutions

S10

Plymouth Science Park

Plymouth

PL6 8BX